For the past year and a half, I have been working on an ongoing thesis project that seeks to test the feasibility of 3D laser scanning in a zooarchaeological context. I have been using the NextEngine HD Scanner and the MakerBot 3D Digitizer to scan bones from various species of waterfowl (Figure 1). You can read more about my project here:

Over the summer, I had the opportunity to work with Dr. Benjamin Ford to test 3D technologies on a much larger scale. In the process, I’ve acquired new knowledge about 3D techniques and how they respond to different environments. The goal of this mini project was to create miniature 3D replicas of two buildings on the Indiana University of Pennsylvania campus: McElhaney Hall and Sutton Hall. After my first brainstorming session, I thought that photogrammetry would be the best technique for constructing the 3D models. Photogrammetry is a 3D modeling method in which you take multiple overlapping images around an object of interest. The researcher then imports the photographs into photogrammetry software, and the software uses reference points from the photos to build a “mesh cloud” of the object.

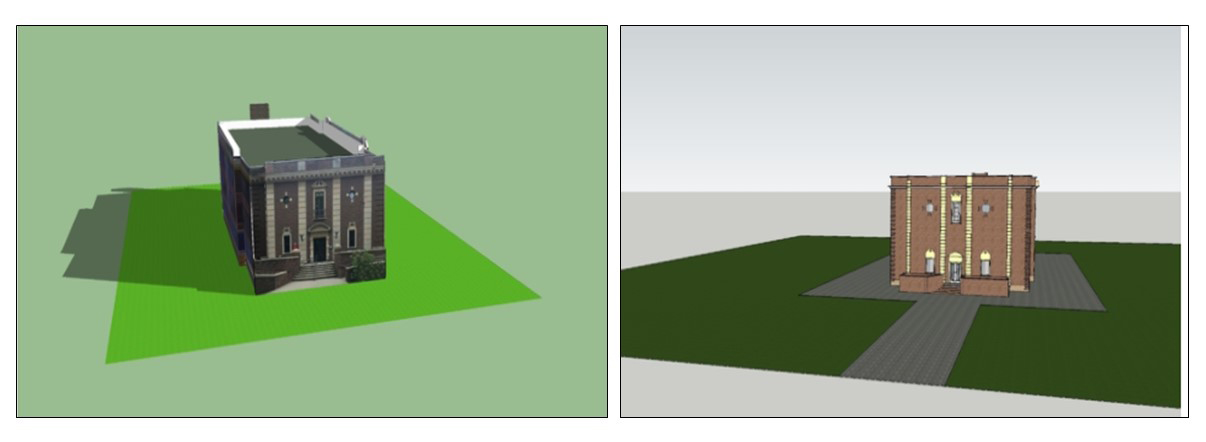

After multiple attempts at using this technique, I discovered that it would not work with all of the beautiful foliage that surrounds the buildings. The shifting background lighting in the sky and the height of the building features also created issues when it came to stitching all of the images together. The resulting photogrammetry model of McElhaney (below) turned out slightly wavy and distorted in sections where tree limbs were obstructing features of the building. The use of drone technology may have alleviated some of these problems, since a drone’s camera can capture detail that cannot be photographed from the ground. However, potential safety issues prohibit the use of drone technology near academic buildings. So… I had to get creative and use a mixture of the photographs and pre-existing satellite imagery from Google Maps (2017) to construct the building models. I was able to pull the map data into a 3D modeling program called SketchUp, and I used the satellite maps to obtain reference points for the scale and size of the buildings. From there, I imported the photographs and manually overlaid the architectural features (e.g., stairs, windows, and porches) of the buildings using the build tools in SketchUp. A comparison between the photogrammetry model and the manually created SketchUp model is linked below. The rationale for manually adding in architectural features was to emphasize them for 3D printing.

In the end, the 3D models of the buildings may not have turned out to be exact replicas of their original forms due to some of the issues mentioned above. However, this project has taught me a lot about the potential advantages and disadvantages of using 3D techniques at larger scales and in changing environments. The resulting models are optimized for 3D printing, which means some of the textural features had to be simplified or eliminated to make the models more structurally sound and printer friendly. At present, I am using the MakerBot 3D Replicator to “build” miniature tabletop replicas of the buildings.
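For anyone curious about the arithmetic behind shrinking a building down to tabletop size, here is a minimal Python sketch of how a uniform print scale could be worked out. It is an illustration only, not the workflow I used in SketchUp: the function name `fit_scale`, the building dimensions, the build volume, and the margin are all hypothetical placeholders, not measurements of McElhaney Hall, Sutton Hall, or any particular printer.

```python
def fit_scale(building_dims_m, build_volume_mm, margin_mm=5.0):
    """Return the largest uniform scale, in mm of print per metre of building,
    that fits the building inside the printer's build volume.

    building_dims_m: (length, width, height) of the building in metres.
    build_volume_mm: (x, y, z) of the printer's build volume in millimetres.
    margin_mm: clearance kept free on each side of every axis.
    """
    usable = [axis - 2 * margin_mm for axis in build_volume_mm]
    if any(u <= 0 for u in usable):
        raise ValueError("margin leaves no usable build volume")
    # The tightest axis limits the scale for the whole model.
    return min(u / d for u, d in zip(usable, building_dims_m))


# Hypothetical numbers, for illustration only.
building = (60.0, 20.0, 15.0)      # metres
printer = (250.0, 200.0, 150.0)    # millimetres

scale = fit_scale(building, printer)
printed = [d * scale for d in building]
ratio = 1000.0 / scale             # real-world mm per printed mm

print(f"scale: {scale} mm per m (about 1:{ratio:.0f})")
print("printed size (mm):", printed)
```

With these made-up numbers, the longest side of the building is the limiting axis, so the whole model is scaled to keep that side inside the usable build area.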

Visit the IUP Anthropology Department